When I work with teams to help them improve their quality and automated testing practices, I’m surprised by how often people get worked up about terminology. “What’s Test-Driven Development?” “What’s Unit Testing?” “What’s Integration Testing?” Etc. etc. etc. Those devs aren’t wrong – there are some differences. But rather than getting freaked out about terminology, I’d rather that the teams focus on what needs to be achieved. Remember that the point is to deliver high-quality, done, working applications.

Maslow’s Testing Hierarchy of Needs

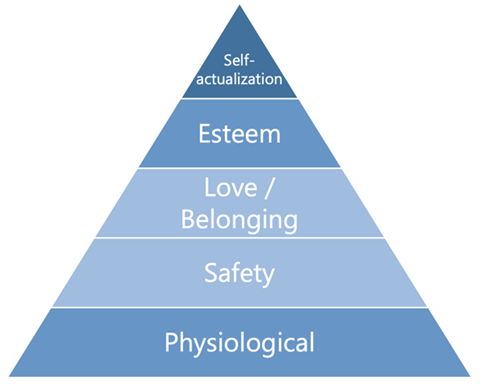

Way back in 1943, a psychologist named Abraham Maslow came up with an idea that he named the Hierarchy of Needs. It’s a way of thinking about what makes humans happy and productive. At the base of the hierarchy, you’ve got basic physiological needs like food, water, shelter, and sleep. From there, it moves up through Safety, Love / Belonging, Esteem, and finally Self-actualization. It takes a lot to check all the boxes in the hierarchy, but a way to think of it is that, starting from the bottom, the more boxes you satisfy, the happier you’ll be. But you need to work your way up from the bottom because (for example) even if everyone loves you (level 3: yes), it’s hard to feel great if you’re starving and homeless (level 1: no).

Maslow's Hierarchy of Needs

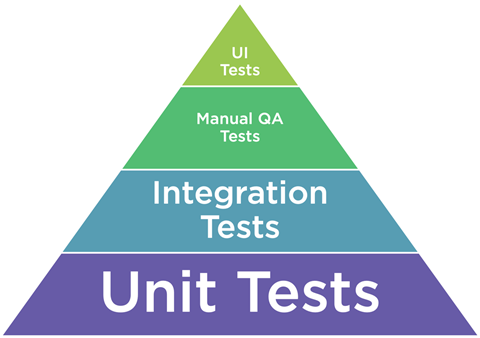

Now what does this have to do with testing? Well, I think there’s a testing hierarchy of needs. Where Maslow is thinking about happiness, the goal with the Testing Hierarchy of Needs is to know if our application is working.

Automated Testing Hierarchy of Needs

There are different ways of knowing if your application is working and different types of tests to get you there. Some of them take more effort or time than others but there is one Big Important Thing to remember: our goal is to know if our application is working.

So all those terms that people talk about like unit tests, test-driven development, integration test, functional tests, behavior-driven development, UI testing, and on and on and on – all those are just ways of knowing if your application is working. Just like there are many paths to happiness, there are many paths to knowing if your application is working.

Testing Speed, Testing Effort, Testing Efficiency, and Testing ROI

As developers, our job is to deliver high-quality, done, working software. That development work takes time. And you know what? Delivering that software takes time, too.

Our employers usually have the goal of us actually delivering software so that they can charge their customers for its use and/or enable them to get some kind of value from using the stuff that we’ve written.

Software that is developed is interesting. Software that’s delivered and being used is valuable.

What often goes unstated is that while our employers might want us to deliver that software as fast as possible, they don’t want us to deliver garbage. The stuff we write has to – ya know – uhhh – actually work. Testing helps us get to the point where we feel confident enough to ship the software and release it into the world.

We’re probably never going to release a product that is bug-free. There’s almost always a defect somewhere in the code. We just want to make sure that it’s not a world-ender of a defect. There’s a tension between endless testing in order to find EVERY possible defect versus testing enough to feel confident that we can ship.

Usually, the biggest pressure is time. “Hurry up and ship it!” The faster you can get to your level of quality confidence so that you can ship, the sooner that you can release your code.

Tests run by humans tend to be slow because a human can only do one thing at a time. On the plus side, humans can use their creativity to find bugs that might be lurking a little bit outside of the defined scope of their test script. Those exploratory tests that a human might run are really valuable but they take a long time, and that human’s time is precious. Tests run by a computer (automated tests) tend to run a lot faster than human-based testing. But computers lack creativity, so if something isn’t part of the automated test code, it’s not going to get picked up. Also, those automated tests have to be written by SOMEONE and that someone is usually a developer.

Pros and Cons of Automated Tests & Human / Manual Tests

| Automated Testing: Pros | Automated Testing: Cons | Human / Manual Testing: Pros | Human / Manual Testing: Cons |
| --- | --- | --- | --- |
| • Run quickly by a computer<br>• Fantastic return on investment. Write them once, then run them many, many times per day for years<br>• Help you to maintain your application because the checks become part of the code | • The testing scripts have to be written<br>• The test code has to be maintained<br>• Computers can’t creatively find bugs that aren’t checked for by the test<br>• You’ve got to be precise when you write the test scripts | • Uses human creativity<br>• Doesn’t require an exact, painfully precise script to be written<br>• Humans can find bugs creatively (exploratory testing) | • Humans are slow<br>• Humans can only do one thing at a time<br>• Humans make mistakes<br>• Human time is valuable and you have to worry about whether this is the best use of their time<br>• Every time you run the test a human has to be there to run it |

The pros and cons of automated testing and human / manual QA testing

Automated Test vs. Unit Test

Any test that can run without human involvement (except for the effort of starting the test) is what I’d call an “Automated Test”. It’s run by a computer. You’ve described what the test is and how it works using code and that code gets run against your application. That’s an automated test.

Unit tests, integration tests, user interface tests (Selenium, Protractor, Coded UI) -- these are all types of automated tests. What they do and how they work is a different story.

What is a Unit Test?

A unit test is a piece of test code that exercises and validates a piece of code in your application. In my Hierarchy of Testing Needs, unit tests are at the bottom, but this isn’t because I think they’re not valuable. I think that they’re THE MOST IMPORTANT type of test, with the biggest return on investment (ROI).

The goal with a unit test is to check a very small piece of functionality in your application in order to see if that application code works. Ideally, that code is tested in isolation without any dependencies. For example, if you’re testing validation logic, I’d like to hope that your application code is written so that you don’t have to deploy your billing database at the same time in order to get your automated test to run. I’d like to hope that you don’t have to deploy your database for any of your unit tests.

Minimizing the required deployed dependencies helps you to:

- quickly isolate the causes of any failures

- have your tests run quickly

- keep your tests focused only on what is necessary for the tests (and not the stuff that’s required to make the dependencies happy)
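As a minimal sketch of what that looks like, here's a unit test written with Python's built-in `unittest` module. The `validate_email` function and its rules are hypothetical, made up purely for illustration. The point is that the validation logic gets checked without a database or any other deployed dependency.

```python
import unittest


def validate_email(value: str) -> bool:
    """Hypothetical rule: exactly one '@', text before it, a dotted domain after it."""
    if value.count("@") != 1:
        return False
    local, domain = value.split("@")
    return (
        bool(local)
        and "." in domain
        and not domain.startswith(".")
        and not domain.endswith(".")
    )


class ValidateEmailTests(unittest.TestCase):
    # Each test checks one small behavior and needs no setup at all.
    def test_accepts_well_formed_address(self):
        self.assertTrue(validate_email("ben@example.com"))

    def test_rejects_missing_at_sign(self):
        self.assertFalse(validate_email("ben.example.com"))

    def test_rejects_empty_local_part(self):
        self.assertFalse(validate_email("@example.com"))
```

If you save this in a file, `python -m unittest <filename>` should discover and run the tests. Because nothing has to be deployed, a failure points you straight at the validation logic.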

Unit Tests vs. Integration Tests

Lots of developers think of unit tests and integration tests as being pretty much the same thing. And they are pretty much the same thing...but not exactly the same thing. The difference is the goal. Unit tests test small pieces of functionality in isolation without dependencies. Integration tests test pieces of functionality WITH dependencies. By necessity, integration tests almost always test more logic at one time. They’re almost always more difficult to set up because you have to set up the primary test case plus any of the dependencies for that test case. So while a unit test probably (hopefully) doesn’t call into a database, it’s quite common for an integration test to call into a database.
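To make the difference concrete, here's a rough sketch that puts the two side by side. Everything in it is hypothetical: `is_valid_name`, `CustomerRepository`, and the `customers` table are invented for the example, and an in-memory SQLite database stands in for whatever database your application really uses.

```python
import sqlite3
import unittest


def is_valid_name(name: str) -> bool:
    """Pure logic with no dependencies: a good target for a unit test."""
    return bool(name.strip())


class CustomerRepository:
    """Hypothetical data-access class that depends on a database connection."""

    def __init__(self, connection: sqlite3.Connection):
        self._connection = connection

    def add(self, name: str) -> int:
        if not is_valid_name(name):
            raise ValueError("name must not be blank")
        cursor = self._connection.execute(
            "INSERT INTO customers (name) VALUES (?)", (name,)
        )
        self._connection.commit()
        return cursor.lastrowid

    def get_name(self, customer_id: int):
        row = self._connection.execute(
            "SELECT name FROM customers WHERE id = ?", (customer_id,)
        ).fetchone()
        return row[0] if row else None


class NameValidationUnitTests(unittest.TestCase):
    # Unit test: nothing to set up, just call the function.
    def test_blank_name_is_invalid(self):
        self.assertFalse(is_valid_name("   "))


class CustomerRepositoryIntegrationTests(unittest.TestCase):
    # Integration test: the dependency (a database) has to exist first.
    def setUp(self):
        self.connection = sqlite3.connect(":memory:")
        self.connection.execute(
            "CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT NOT NULL)"
        )
        self.repository = CustomerRepository(self.connection)

    def tearDown(self):
        self.connection.close()

    def test_saved_customer_can_be_read_back(self):
        customer_id = self.repository.add("Ada")
        self.assertEqual("Ada", self.repository.get_name(customer_id))
```

Notice how much of the integration test is setup and teardown for the dependency rather than the check itself. That extra work is the cost of testing more logic at one time.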

Unit Testing vs. Test-Driven Development (TDD) vs. Test-First Development

What is Test-Driven Development (TDD)? Is it a type of test? No. TDD is a way of approaching the effort of writing automated tests. Now what is Test-First Development? In my mind, test-first is just another name for TDD.

The idea with TDD is that you write your test code before you write your application code. That’s it. TDD just says that you write your test code before your application code and that idea can start religious wars in the software development world.

Why do Test-Driven Development (TDD)?

When I’m working with a team, I just want them to write automated tests. That’s my first wish. After that, I try to help them get better at writing automated tests. Some automated tests are better than no automated tests. While I might like unit tests more than integration tests, I’d rather have some integration tests rather than no automated tests at all.

So. TDD. Why bother? Writing your tests first does actually help you to write better tests. It forces you to think out your application code just a little bit more than if you just jumped in and started writing that code.

You always want to start with a failing automated test. So you write your test code and it probably won’t compile and it certainly won't work because there isn’t an implementation. Then you start writing the implementation and running the test code. Write a little bit. Run the tests. Fail. Write a little bit more. Run the tests. Fail. Write a little bit more. Run the test. Pass! Yay! It’s done!
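Here's a small sketch of where that cycle might end up, again using Python's `unittest`. The `apply_discount` function and its rules are hypothetical. Python isn't compiled ahead of time, so instead of a compile error, running the tests before the function exists would error out with a `NameError`, which is your failing starting point.

```python
import unittest


# Step 1: written first. Before apply_discount exists, running these
# tests errors out with a NameError. That is the failing test you want.
class ApplyDiscountTests(unittest.TestCase):
    def test_ten_percent_off_one_hundred(self):
        self.assertEqual(90.0, apply_discount(100.0, 10))

    def test_negative_percent_is_rejected(self):
        with self.assertRaises(ValueError):
            apply_discount(100.0, -5)


# Step 2: written afterwards, a little at a time, re-running the tests
# after each change until they pass.
def apply_discount(price: float, percent: float) -> float:
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return price * (100 - percent) / 100
```

The test class sits above the function on purpose, to mirror the order in which the code was written. Python only looks up `apply_discount` when a test actually runs, so the ordering in the file doesn't matter once the implementation is in place.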

When I’m done, I almost always find that the code that I’ve written is cleaner and easier to read if I wrote the test first. Additionally, if I write my test code first and start with a failing automated test, I know that I'm actually writing a decent test. If you write a test and it passes before you’ve written the code that it’s validating, that’s just suspicious...and probably wrong.

Summary

Don’t get too worried about what stuff means. Try to write automated tests. Try to write your automated tests so that they don’t have any dependencies (a.k.a. unit tests). Write a lot of unit tests. Then if you’ve got time, write integration tests. Try to follow test-driven development (TDD) and write your test code before your application code. But if you don’t do that, don’t worry.

Just write automated tests. They’ll make your life easier and happier.

-Ben