If you've decided that you need to do a full migration to Azure DevOps Services (aka. The Azure DevOps Cloud), there's a tool for migration. And a guide. A 60 page guide. And then there's the documentation.

Let's face it, migrating your on-premise Team Foundation Server or Azure DevOps Server to the cloud isn't exactly a piece of cake. Even if you download and read the documentation, there's still a lot to wrap your head around.

Hopefully, this post will give you a feel for what the steps are. BTW, if you're still trying to decide if a full migration is worth your effort, check out this post on how to decide the best Azure DevOps migration approach.

The 40,000 Foot Overview (aka. The Summary of the Summary)

Here's the summary of the summary:

- Identity migration and user data decisions related to Azure Active Directory

- Download the migration tool

- Use the migration tool to validate your Team Project Collection (TPC)

- Fix any validation errors and re-validate (repeat)

- Use the migration tool to prepare your Team Project Collection (TPC)

- Upload your data

- Use the migration tool to import your data to Azure DevOps Services

- Enjoy

Simple, right? Let's talk about this in some more detail.

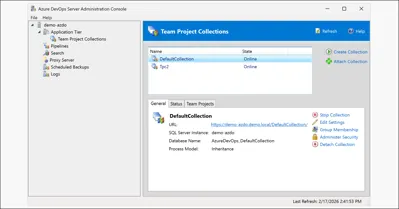

Team Project Collections (TPC) and Azure DevOps Services

In the on-premise version of Azure DevOps Server and Team Foundation Server, there's a structural concept called a Team Project Collection (TPC). Notice that I'm saying Team Project Collection rather than just Team Project.

A Team Project is the container for your stuff. When you create it, you choose which work item process template you're going to start with and what you'd like to use as your default version control system. After that you fill it up with all your stuff.

Well, when you created that Team Project, there was a slightly hidden detail -- that detail was where your new Team Project data gets stored. That storage location is the Team Project Collection (TPC). It's a container for a bunch of related Team Projects. Under the surface, it's a database in SQL Server and each TPC gets its own SQL Server database.

Thankfully, this "which TPC?" detail is largely hidden from most users so there's a good chance that you only have one TPC. For migration purposes, having just a single TPC is much easier. If you have multiple Team Project Collections, your migration to the cloud is about to get more complicated.

The reason it gets more complicated is how on-premise data gets migrated into the cloud. Each TPC gets migrated into its own tenant (aka. "account") in the Azure DevOps cloud. Let's say your company name is AwesomeCorp. You're probably thinking that you'll migrate your Azure DevOps Server to something like https://dev.azure.com/awesomecorp. AwesomeCorp would be your ideal account name. If you only have one TPC you're fine.

But let's say that you have two TPCs: Development and Operations. Since each migrated TPC gets its own account that means that you'll probably end up with something like "AwesomeCorpDevelopment" and "AwesomeCorpOperations" as your account names in Azure DevOps. That means two URLs for your stuff:

- https://dev.azure.com/AwesomeCorpDevelopment

- https://dev.azure.com/AwesomeCorpOperations

Probably not the end of the world, but you'll also probably be paying for some things twice. Plus, you'll need to do the migration process (essentially) twice because the migration tool works on one TPC at a time.

If you only want to have one Azure DevOps account in the cloud, you'll need to figure out how to join your TPCs before you start the migration process.
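To make the one-account-per-TPC rule concrete, here's a tiny Python sketch. The `account_urls` helper and its naming rule (company name plus collection name) are made up for illustration. You pick your own account names when you import.

```python
def account_urls(company, collections):
    """Map each TPC name to the Azure DevOps account URL it might land in.

    Hypothetical naming rule: a single TPC gets the plain company name,
    multiple TPCs get company name + collection name.
    """
    base = "https://dev.azure.com/"
    if len(collections) == 1:
        return {collections[0]: base + company}
    return {name: base + company + name for name in collections}


for tpc, url in account_urls("AwesomeCorp", ["Development", "Operations"]).items():
    print(tpc, "->", url)
```

Two collections in, two accounts (and two URLs) out. That's the thing to plan around.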

Identity, Active Directory, and Azure Active Directory

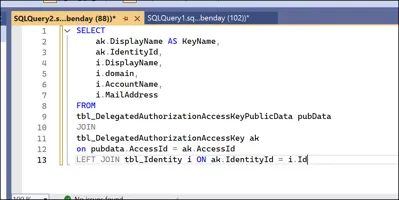

Everything that you and your employees and coworkers have done in your Azure DevOps Server or Team Foundation Server has been logged and stored according to their identity. This is part of what's great about AZDO and TFS -- you want to know who did what. Every file added, every change made, every build run, every test case passed or failed -- that's all in the database somewhere tied to a user's identity.

That identity was almost definitely governed by Active Directory. Users logged in to their machines, those machines were probably joined to an Active Directory Domain, and that Domain was the source of truth for the user identity.

When you move to the cloud you're going to be using Azure Active Directory (AAD) for your identity rather than Active Directory (AD). AAD provides similar services to your on-prem AD but the implementation is quite different. When your data gets imported to Azure DevOps Services, the import tool is going to make sure that it can map your user data from AD to the user data stored in AAD.

Getting this done properly is super important because you only have one chance to get this right. If the user identity mapping doesn't work when you import, you're stuck with that data as-is. Users that aren't mapped/mappable during import get stored as "historical". You'll still be able to see all that data, but it won't be tied to a user account anymore.
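If you want a rough preview of who might end up as "historical", you can compare your list of TFS users against your list of AAD users before you import. Here's a minimal Python sketch. The `classify_identities` function and the case-insensitive match on sign-in name are my assumptions for illustration, not how the import tool actually does its matching.

```python
def classify_identities(tfs_users, aad_users):
    """Split TFS identities into those with an AAD match and those without.

    Illustrative only: matching is a case-insensitive comparison of
    sign-in names, which is an assumption and not the import tool's logic.
    """
    aad = {name.lower() for name in aad_users}
    active = sorted(u for u in tfs_users if u.lower() in aad)
    historical = sorted(u for u in tfs_users if u.lower() not in aad)
    return active, historical


active, historical = classify_identities(
    ["alice@awesomecorp.com", "bob@awesomecorp.com", "carol@awesomecorp.com"],
    ["Alice@awesomecorp.com", "carol@awesomecorp.com"],
)
print("active:", active)
print("historical:", historical)
```

Anyone who lands in the second list is worth chasing down before the real import, since there's no do-over afterwards.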

Active Directory to Azure Active Directory Synchronization

Your first big thing to figure out is how you're going to synchronize your on-premise Active Directory Domain to your Azure Active Directory tenant in the cloud. If your company is already using Office 365 for your email and for SharePoint, this synchronization issue has almost definitely already been taken care of for you. If that's the case, you're done and you can move on.

If it hasn't been set up, this is the first thing you're going to need to do. If your Active Directory Domain structure is simple and straightforward and not filled up with a bunch of old junk, then getting this sync process working should be pretty quick -- a couple of hours or a couple of days. If your AD Domain is complex, it could take much longer.

Microsoft provides a tool called Azure AD Connect that does the synchronization. You'll need to set that up and schedule it to run periodic synchronizations to/from the cloud.

Validate. Fix. Re-validate.

The Azure DevOps data import migration tool from Microsoft has 3 big features: validate, prepare, and import.

The validate step looks at your Team Project data and checks to make sure that it can be imported into the cloud. The validation errors are almost always going to be from the work item tracking system. Work item tracking means all the features related to project planning, requirements management, defect tracking, and QA testing.

This can be easy or this can be super painful. It all depends on how much you customized your work item type definitions. If you didn't customize your work items or you only made minimal changes, you're probably in good shape.

Changes that will probably be fine:

- Adding one or more additional state values to a work item type

- Adding one or more additional field(s) to a work item type

- Adding one or more new, custom work item types

Changes that will probably cause problems:

- Deleting or renaming any of the default work item types

- Removing any of the default work item type state values

- Using lots of validation rules and/or state transition rules in your work item types

- Using custom control types in your work item types

- Messing with the configuration files for your Team Projects, such as the categories and process config files

Another thing that could cause you validation pain is if you have old Team Projects that you haven't kept up to date as you upgraded your server. Over the years, Team Foundation Server has gotten some significant feature improvements. If you upgraded your server regularly over that time, your Team Projects probably got upgraded, too. If you didn't do that, you might need to go and manually update those features.

Another source of migration concern is related to automated builds and automated releases. If you have any really old builds like the MSBuild-based builds from the TFS2005-ish days, you'll need to upgrade them. Same thing for any of the XAML-based builds from the TFS2010-ish time frame. Either of those technologies is going to give you validation errors and warnings because they've been deprecated and/or removed from the product.

The basic process is that you run validate, make changes to address the validation errors, and then repeat this process until the validation passes.
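That validate, fix, re-validate loop can be sketched as a little Python wrapper. Treat this as a rough sketch under assumptions: the executable name `Migrator.exe`, the `/collection:` switch, and the idea that an exit code of zero means "passed" should all be checked against the guide that comes with your copy of the tool. The `validate_until_clean` helper itself is hypothetical.

```python
import subprocess


def validate_command(collection_url, migrator_exe="Migrator.exe"):
    """Build the command line for the migration tool's validate step.

    The executable name and the /collection: switch are assumptions;
    confirm them against the tool's own documentation.
    """
    return [migrator_exe, "validate", f"/collection:{collection_url}"]


def validate_until_clean(collection_url, run=subprocess.run, pause=input, max_rounds=10):
    """Run validate, wait for manual fixes, and repeat until it passes.

    Returns the number of rounds it took. Treating exit code 0 as
    "validation passed" is an assumption.
    """
    for round_number in range(1, max_rounds + 1):
        result = run(validate_command(collection_url))
        if result.returncode == 0:
            return round_number
        pause(f"Round {round_number} failed. Fix the errors, then press Enter...")
    raise RuntimeError("validation is still failing; giving up")
```

You'd call it with your collection URL, for example `validate_until_clean("http://localhost:8080/tfs/DefaultCollection")`. The `run` and `pause` parameters are only there so the loop can be exercised without the real tool.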

Big Databases versus Little Databases

One of the things that will happen during your validation efforts is that the tool will tell you how you'll need to import your data. If your database is smaller than about 30GB, you'll be able to import your data using a dacpac file. A dacpac is a type of backup that contains the schema and data in a format that's readable using Microsoft's SqlPackage.exe tool. If you can use the dacpac import, your import process is a little simpler.

If your database is over about 30GB, you'll either get a warning saying that a dacpac import is not recommended or an error saying that your data is so big that dacpac import is not supported.

If you can use a dacpac, you'll upload your data (the dacpac) to Azure Storage and your data will be imported from there.

If your data is too large, you'll need to create a virtual machine in Azure that's running SQL Server. You'll restore your data onto that SQL Server and then when you do your import, the data will be imported from that server.

Three things to think about if you have to go the SQL Server VM route:

- The first very big and very important thing is that you need to be absolutely certain that you've created this VM in the same Azure region as the Azure DevOps region that you'll be importing to. If you're in the US, you'll almost definitely be importing to Azure DevOps Services running in the Central US region. That means that you'll need to create your virtual machine in the Central US region, too.

- The second thing to think about -- well, remember -- is that as soon as you're done with the Azure DevOps import, you can delete this VM. You do not need to keep this VM running indefinitely.

- Importing using SQL Azure is not supported. I get this question from time to time from IT organizations and the answer is no. Not supported.
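Here's the size decision and the region rule as a small Python sketch. The 30GB cutoff comes straight from the rough number above, and the validation output from the tool is what really decides. The function names are hypothetical.

```python
DACPAC_LIMIT_GB = 30  # "about 30GB" per the text; the tool has the final say


def choose_import_method(database_size_gb):
    """Return which import route to plan for, based on database size alone."""
    if database_size_gb < DACPAC_LIMIT_GB:
        return "dacpac via Azure Storage"
    return "SQL Server VM in Azure"


def check_vm_region(vm_region, devops_region):
    """Raise if the SQL Server VM isn't in the same region as the import target."""
    if vm_region.strip().lower() != devops_region.strip().lower():
        raise ValueError(
            f"VM is in {vm_region!r} but the import targets {devops_region!r}"
        )


print(choose_import_method(12))
print(choose_import_method(250))
check_vm_region("Central US", "central us")  # same region, so no error
```

The region check is deliberately strict. A VM in the wrong region is the kind of mistake you'd rather catch before you've restored a huge database onto it.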

The Prepare Step

If you've gotten this far in the process, you're getting really close to being done. Once your TPC is passing validation, you'll run the prepare command in the migration tool. This prepares your TPC database for import and creates some configuration files that will be used by the import.

Pro Tip: The person who runs the prepare has to be the same person who runs the import.

One of the config files created by the prepare tool is called import.json. You'll need to open this file in a text editor and populate it with some crucial details, such as whether this is a 'dry run' (aka. test import), what account name you want to use, and where the data is being imported from.
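If you'd rather script those edits than do them by hand, a sketch like this shows the idea in Python. Big caveat: the key names used here (`Target`/`Name`, `Properties`/`ImportType`, `Source`/`Location`) and the `DryRun`/`ProductionRun` values are assumptions for illustration. Use whatever keys and values your generated import.json actually contains.

```python
import json


def fill_import_spec(spec, account_name, dry_run, source_location):
    """Return a copy of the import spec with the three key details filled in.

    All key names and values below are assumptions; match them to the
    import.json that the prepare step generated for you.
    """
    filled = json.loads(json.dumps(spec))  # cheap deep copy of JSON data
    filled.setdefault("Target", {})["Name"] = account_name
    filled.setdefault("Properties", {})["ImportType"] = (
        "DryRun" if dry_run else "ProductionRun"
    )
    filled.setdefault("Source", {})["Location"] = source_location
    return filled


# Stand-in for the file that prepare generates; everything else is left untouched.
spec = {"Source": {}, "Target": {}, "Properties": {}, "ValidationData": {"kept": True}}
filled = fill_import_spec(spec, "awesomecorp-dryrun", True, "<your storage URL>")
print(json.dumps(filled, indent=2))
```

In real life you'd `json.load` the file from disk, fill it in, and `json.dump` it back out. Anything the prepare step wrote that you don't recognize should be left alone.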

Backup Your Database

Once you've done the prepare, you'll need to back up your Team Project Collection database. IT MUST BE DONE IN A CERTAIN WAY!!!!

- Go to the Azure DevOps Admin Console app on your app tier machine

- Go to the Team Project Collections section of the app

- Stop the Team Project Collection you're importing

- Detach the Team Project Collection you're importing

- Back up the database

It's the detach step where people go wrong. They stop the TPC but they don't detach it from Azure DevOps. You've GOT TO DETACH IT before you do the backup.

Detach first. Then back up. (If you don't do it like this, it will fail.)

Once you've detached, then you can do either the dacpac backup or the SQL Server backup per the recommendations of the migrator tool.
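For the dacpac route, the backup is a SqlPackage.exe extract. Here's a Python sketch that builds that command line and refuses to do so unless you tell it the collection has been detached. The server name, database name, and file path are placeholders, and the `/p:` properties shown are one reasonable set of extract options rather than a definitive recipe, so compare them with the current migration guide. The `dacpac_extract_command` helper is hypothetical.

```python
def dacpac_extract_command(server, database, target_file, detached):
    """Build a SqlPackage.exe extract command for a detached TPC database.

    Refuses to build the command unless the caller confirms the collection
    was detached first. Server, database, and file names are placeholders.
    """
    if not detached:
        raise RuntimeError("Detach the Team Project Collection before backing up")
    connection = (
        f"Data Source={server};Initial Catalog={database};Integrated Security=True"
    )
    return [
        "SqlPackage.exe",
        "/Action:Extract",
        f"/SourceConnectionString:{connection}",
        f"/TargetFile:{target_file}",
        "/p:ExtractAllTableData=true",
        "/p:IgnoreUserLoginMappings=true",
        "/p:IgnorePermissions=true",
        "/p:Storage=Memory",
    ]


command = dacpac_extract_command(
    "localhost", "Tfs_DefaultCollection", r"C:\dacpac\Tfs_DefaultCollection.dacpac", detached=True
)
print(" ".join(command))
```

The `detached` flag is just you promising that you did it. The script can't check the Admin Console for you.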

If you're doing a trial migration ('dry run'), you can now reattach and restart your TPC. If you're doing a production migration right now, don't attach and restart the TPC because users might add data to the database that won't get migrated.

Upload to the Cloud

Once you have your database backup, upload it to the cloud -- either to Azure Storage if it's a small database or to your SQL Server VM if it's a large database.

Import

You're ready to go. All you need to do now is to run the data migrator's import step. And then wait. Probably wait for a while.

Remember: the prepare step and the import step need to be run by the same person.
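If several people are sharing the migration work, it can help to write down who ran the prepare and check it again right before import. This is a minimal Python sketch of that idea. The marker file and both function names are hypothetical, and comparing local user names is only a stand-in for the identity that the tool actually cares about.

```python
import getpass
from pathlib import Path


def record_prepare_user(marker, user=None):
    """Write down who ran prepare so the import step can check it later."""
    Path(marker).write_text(user or getpass.getuser(), encoding="utf-8")


def check_import_user(marker, user=None):
    """Raise if the person about to run import isn't the one who ran prepare."""
    prepared_by = Path(marker).read_text(encoding="utf-8").strip()
    current = user or getpass.getuser()
    if current.lower() != prepared_by.lower():
        raise PermissionError(
            f"prepare was run by {prepared_by!r}, "
            f"but import is being run by {current!r}"
        )
```

Call `record_prepare_user("prepared-by.txt")` right after the prepare step and `check_import_user("prepared-by.txt")` right before you kick off the import.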

After the import has started, you'll get an email with a link to view the status of your import. It'll probably take a while.

Enjoy

Hopefully your import completed successfully. Now's the time to enjoy your Azure DevOps data. That was probably a lot of work. Maybe you should consider a vacation. Or asking your boss for a raise. Or maybe a bonus. Heck! Ask for a bonus AND a raise. That import was no joke.

Summary

So that's the overview of the Azure DevOps data import process. As you can see, there are a lot of things to think about.

I hope this helped.

-Ben
