If you're working in Azure DevOps using Git and want to have code reviews as part of your development process, feature branches and Pull Requests are a great idea. You'll probably also want to set up some branch policies that will run some automated builds and do some basic code validation.

So which build pipeline definition should that be? Should it be the same one that deploys everything to production? Or should you have a separate build pipeline definition just to validate the pull requests?

In short: one YAML Pipeline to rule them all or two YAML Pipeline definitions? There are some pros and cons to each approach and in this article, I'm going to talk you through the approaches.

Ultimately, I think you'll want to have two YAML Pipelines and use YAML Templates to reduce code duplication.

BTW, big thanks to my friend Mickey Gousset for his input on this topic. He's got a lot of great videos on YAML and GitHub Actions up on YouTube that you need to watch.

Pull Requests, Branch Policies, and YAML Build Pipelines

First, let's think about the main branch in your Git repository. It's the default branch and that's where you'll do your releases to production from. Any code that is going to go to production has to make it to this branch. You'll probably also do your QA testing work from that main branch, too.

If you want to do code reviews using Pull Requests, you'll need to create a Git branch for any feature development work that you do. All the changes (commits) related to a feature will go in that branch and when the feature is ready to move toward production (development is complete), you'll create a pull request that will move those changes from the feature branch to the main branch.

If you're confused by the term "pull request", I'm not surprised. I've long thought it would make a lot more sense if it were called a "merge request". But I digress.

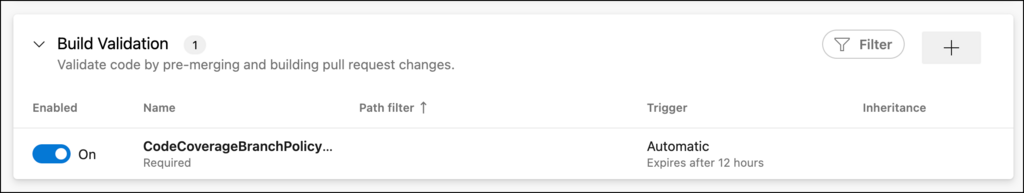

In order to streamline and manage this pull request process, you're probably going to set up a bunch of rules in Azure DevOps called Branch Policies. The most straightforward branch policy is that a certain automated build needs to run and pass before the feature branch code can be merged to main. Essentially, this makes sure that what you're doing doesn't "break the build".

A Build Validation branch policy in Azure DevOps

It's that build validation branch policy that starts to make life a little confusing. In software we have it constantly drilled into our heads that we shouldn't have duplicate code. It's the "DRY Principle" that says Don't Repeat Yourself. So following the DRY Principle, we should strive to have only one YAML Build Pipeline, right? But if our Pipeline is used to move code from Dev to QA to Production, wouldn't using that pipeline to validate Pull Requests cause a gigantic mess?

What's the right answer?

Why is One YAML Pipeline for Everything So Hard to Do?

I'm a company of one. For my website and for the apps that I write for my company, I rarely use Pull Requests. I mean, I wrote the code and I'm the person who would approve the PR, so what would be the point? So for the stuff that I do, I have just one YAML Pipeline in Azure DevOps that compiles the code, tests the code, deploys to a QA environment in Azure, and then optionally deploys to my production resources in Azure.

That YAML pipeline is pretty complex and includes deploying database changes, lookup data, and configuration data in addition to deploying the application bits. It's complex but this is what I create for my DevOps customers and those customers probably have dozens if not hundreds of developers contributing to those repos. The complexity in that deployment pipeline is a good thing.

There's a lot of build and validation logic that happens in that pipeline that's probably pretty complicated and "fiddly". Wouldn't I want to use that logic to validate my Pull Requests?

Well, yes. You would. But that deployment pipeline probably has multiple YAML stages in it (build, deploy to test, integration testing, deploy to production, etc) and not all of those are relevant for your Pull Request validation work.

Think about the code that is being validated by the Pull Request for a second. That code is largely untested while you're in the process of validating and deciding about that Pull Request. Reviewing the Pull Request is itself A LOT of the validation work. Plus, the code isn't integrated with the rest of your code base yet. So if you use your production deployment YAML pipeline to validate the PR, you'd probably want to make some changes to keep that PR from being deployed to QA or Production.

Yeah. That's getting complicated.

Two YAML Pipelines or One YAML Pipeline with Conditional Logic?

If you want to stick with one YAML pipeline that does production deployments plus pull request validation, you can do it. You'll just have to know when the pipeline is being executed from a Pull Request and when it's being executed for production deployments. Once you figure that out, you put conditions on the stages of the YAML pipeline.
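Here's a rough sketch of what those stage conditions might look like. It assumes a hypothetical two-stage pipeline (the stage and job names are made up for illustration) and relies on the predefined `Build.Reason` variable, which Azure DevOps sets to `PullRequest` when a run is queued by a build validation branch policy.

```yaml
# Sketch only: stage names, job names, and the echo placeholder are hypothetical.
trigger:
- main

stages:
- stage: Build
  jobs:
  - job: BuildAndTest
    steps:
    - script: dotnet build --configuration Release
      displayName: 'dotnet build'

- stage: DeployToTest
  dependsOn: Build
  # Run only if Build succeeded AND this run was not queued for a pull request.
  condition: and(succeeded(), ne(variables['Build.Reason'], 'PullRequest'))
  jobs:
  - job: Deploy
    steps:
    - script: echo "deployment steps would go here"
      displayName: 'deploy to test (placeholder)'
```

Every deployment stage in the pipeline would need its own copy of that condition.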

In theory, that probably doesn't add that much complexity to the YAML script and you wouldn't have to worry about code duplication for the build/test stuff. In reality, that complexity will probably drive you crazy because it will almost definitely require you to spend a bunch of time debugging and you will almost certainly mess it up at least once.

That starts to push us towards the idea of having two pipelines: one for production deployments and one for pull request validations.

Pros & Cons for One Big Pipeline

| Pro | Con |
|---|---|
| One build to rule them all | If your pipeline deploys to multiple environments (dev, QA, stage, production), you're almost certainly not going to want to deploy your PR branch to an important environment as part of the validation |
| Don't repeat yourself | Adding conditionals to the stages in the pipeline to decide what/whether/where to deploy can get complicated |
| No duplication | Harder to understand. You need to know what the build does when it's run against Main vs. run against a PR feature branch. This might cause pain when you have to debug the pipelines |
| Theoretically, easy to understand | PR validation executions can clutter your pipeline status views. This makes it hard to tell what has been deployed to which environments (especially prod) |

Pros & Cons for Two Pipelines: Deployment & PR Validation

| Pro | Con |
|---|---|
| Super easy to understand. Keeps the goals of the pipelines separated. | You have multiple YAML pipelines that sort of do the same thing |
| Keeps the deployment pipeline status view clean. Easy to see deployment status for the application. No PR validation clutter. | If there's an important validation, you need to make sure it appears in both places |

YAML Templates to the Rescue

I think that having two separate YAML pipelines is probably the right way to go: one for production deployments off of the Main branch and one for Pull Request validation. That means that you have to worry about code duplication in your YAML Pipeline definitions.

The way to handle this code duplication is to use YAML Templates in Azure DevOps. Templates allow you to extract a portion of your pipeline logic into a separate file in version control and then reference that template file from other pipelines.

Let's say you have a fairly simple bit of logic for building and unit testing a .NET Core application that you want to use in your PR pipeline and your deployment pipeline.

azure-pipelines.yml (original):

```yaml
trigger:
- main

pool:
  name: Default

variables:
  buildConfiguration: 'Release'

steps:
- script: dotnet build --configuration $(buildConfiguration)
  displayName: 'dotnet build $(buildConfiguration)'

- task: DotNetCoreCLI@2
  displayName: run unit tests
  inputs:
    command: 'test'
    projects: '**/HelloWorld.Tests.csproj'
    arguments: '--collect:"Code Coverage"'

- script: dotnet publish --configuration $(buildConfiguration) -o $(build.artifactStagingDirectory)
  displayName: 'dotnet publish $(buildConfiguration)'

- task: PublishPipelineArtifact@1
  inputs:
    targetPath: '$(build.artifactStagingDirectory)'
    artifact: 'drop'
    publishLocation: 'pipeline'
```

From here, we can extract this logic into a new file called build.yml.

build.yml:

```yaml
parameters:
- name: buildConfiguration
  type: string
  default: 'Debug'

steps:
- script: echo parameters->buildConfiguration is ${{ parameters.buildConfiguration }}

- script: dotnet build --configuration ${{ parameters.buildConfiguration }}
  displayName: 'dotnet build ${{ parameters.buildConfiguration }}'

- task: DotNetCoreCLI@2
  displayName: run unit tests
  inputs:
    command: 'test'
    projects: '**/HelloWorld.Tests.csproj'
    arguments: '--collect:"Code Coverage"'

- script: dotnet publish --configuration ${{ parameters.buildConfiguration }} -o $(build.artifactStagingDirectory)
  displayName: 'dotnet publish ${{ parameters.buildConfiguration }}'

- task: PublishPipelineArtifact@1
  inputs:
    targetPath: '$(build.artifactStagingDirectory)'
    artifact: 'drop'
    publishLocation: 'pipeline'
```

The big change in build.yml is that we've introduced a Parameters section at the beginning. This is where we'll collect any values that need to be passed in to our YAML template. In this case, it'll be the build configuration (Debug, Release, etc).

azure-pipelines.yml (refactored to use templates):

```yaml
trigger:
- main

pool:
  # vmImage: ubuntu-latest
  name: Default

variables:
  buildConfiguration: 'Release'

steps:
- template: build.yml
  parameters:
    buildConfiguration: $(buildConfiguration)
```

In the azure-pipelines.yml file, we completely replace the build steps with a single step that calls the template.

From here, you can create a YAML file for pull request validation called "pull-requests.yml" and then have that file call the template. Your azure-pipelines.yml continues to function as the "deploy to production" pipeline file, but there are no problems with code duplication because both files reference the same template for the build logic.
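A minimal sketch of what pull-requests.yml might look like is below. It assumes the same `Default` agent pool as the deployment pipeline and an Azure Repos Git repository, where the PR run is queued by the build validation branch policy rather than by a trigger in the YAML. The choice of the `Debug` configuration here is just an illustration.

```yaml
# pull-requests.yml (sketch)
# No CI trigger: this pipeline is meant to run only when the
# build validation branch policy queues it for a pull request.
trigger: none

pool:
  name: Default

steps:
# Reuse the shared build and unit test logic from the template.
- template: build.yml
  parameters:
    buildConfiguration: 'Debug'
```

Because there are no deployment stages in this file, there's nothing to guard with conditions.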

Now in your repository branch policies, you'll have a build policy that points to the pull-requests.yml pipeline. That pipeline is now a lot simpler and only handles the basics that are required for PR validation.

Consider using Templates to Separate Each Stage

Thinking about the typical complexity of the production deployment YAML pipeline, it can be a maintenance nightmare. Looking at one of the ones I use a lot for one of my apps, the YAML pipeline script is almost 400 lines long. That's a lot of stuff.

It might be worth it to refactor each stage in the YAML pipeline into its own template file. For example, something like "build.yml", "deploy-to-test.yml", "manual-approval.yml", and "deploy-to-production.yml". Even if you don't re-use those files, having them split out might make them easier to maintain.
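As a rough sketch, the top-level pipeline could shrink to little more than a list of template references. This assumes each referenced file starts with its own `stages:` list. That's different from the steps-only build.yml shown earlier, so the build stage here uses a hypothetical "build-stage.yml" wrapper that defines a stage and passes the parameter along to build.yml. The other file names come from the list above, and their contents are not shown.

```yaml
# azure-pipelines.yml (sketch): each stage lives in its own template file.
# All referenced files are assumed to begin with a top-level "stages:" key.
trigger:
- main

pool:
  name: Default

stages:
# Hypothetical wrapper that defines a Build stage and calls build.yml.
- template: build-stage.yml
  parameters:
    buildConfiguration: 'Release'
- template: deploy-to-test.yml
- template: manual-approval.yml
- template: deploy-to-production.yml
```

Template paths are resolved relative to the file that includes them, so keeping all of these files in the same folder keeps the references short.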

Summary

In Azure DevOps, deciding how many YAML Pipelines you should have can get a little challenging especially when you have pull requests and production deployments to worry about.

A great way to simplify your YAML life without introducing code duplication is to start using YAML templates. Put the logic/steps that need to be shared between your deployment and PR validation pipelines into YAML templates and then call those templates from your YAML pipelines.

I want to thank my friend Mickey Gousset for his input on this topic. He's got a lot of great videos on YAML and GitHub Actions up on YouTube that you need to watch. Go watch his videos!

I hope this helps.

-Ben
